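    import numpy as np

    num_train = 200  # number of training samples
    sigma2_1 = 0.35  # marginal variance of the GP
    # A range parameter controls how fast the functions sampled from the GP change

    # np.random.uniform() draws samples from a uniform distribution;
    # np.column_stack() stacks 1D arrays as columns of a 2D array
    coordinates = np.column_stack(
        (np.random.uniform(size=1) / 2, np.random.uniform(size=1) / 2))

    # While the number of coordinates is less than the number of training
    # samples, draw another 2D location and append it as a new row
    # (hypothetical loop body: every drawn location is kept)
    while coordinates.shape[0] < num_train:
        coordinates = np.vstack((coordinates, np.random.uniform(size=2)))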
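The snippet above only draws training locations and sets the marginal variance of the GP. To make concrete what sampling a GP at those locations means, here is a minimal pure-Python sketch that draws one zero-mean GP realization through a Cholesky factor of the covariance matrix. The exponential covariance function, the range value `rho = 0.1`, and the helper names `exponential_cov`, `cholesky` and `sample_gp` are assumptions for illustration, not GPBoost's API; the locations here are simply drawn uniformly on the unit square.

```python
import math
import random


def exponential_cov(p, q, sigma2, rho):
    """Exponential covariance between 2D locations p and q (assumed kernel)."""
    d = math.hypot(p[0] - q[0], p[1] - q[1])
    return sigma2 * math.exp(-d / rho)


def cholesky(a):
    """Return lower-triangular L with L L^T = a for a symmetric positive definite a."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def sample_gp(coords, sigma2, rho, rng, jitter=1e-10):
    """Draw one zero-mean GP realization b = L z with z ~ N(0, I) at the given locations."""
    n = len(coords)
    cov = [[exponential_cov(coords[i], coords[j], sigma2, rho) for j in range(n)]
           for i in range(n)]
    for i in range(n):
        cov[i][i] += jitter  # tiny diagonal term for numerical stability
    L = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(n)]


rng = random.Random(1)
num_train = 200   # number of training samples
sigma2_1 = 0.35   # marginal variance of the GP
rho = 0.1         # ASSUMED range parameter; the source does not give its value
coords = [(rng.random(), rng.random()) for _ in range(num_train)]
b = sample_gp(coords, sigma2_1, rho, rng)
```

Because the covariance decays with distance, nearby locations receive similar values of `b`; a larger `rho` makes the sampled function vary more slowly.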

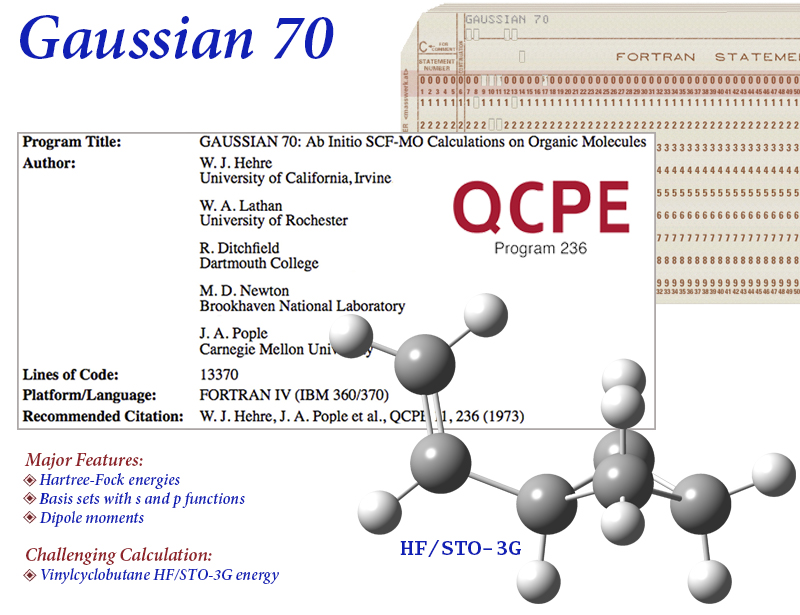

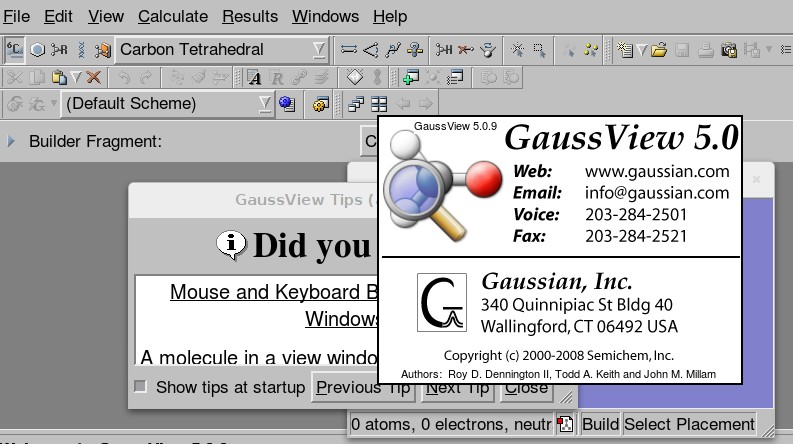

GAUSSIAN SOFTWARE RICHTER LIBRARY INSTALL

Install the SHAP (SHapley Additive exPlanations) library for explaining the output of the GPBoost model.

GAUSSIAN SOFTWARE RICHTER LIBRARY CODE

A Gaussian process (GP) is a collection of random variables such that every finite linear combination of them is normally distributed. It can be viewed as a probability distribution over functions, which makes it useful for quantifying uncertainty in machine learning tasks such as regression and classification.

Tree-boosting (boosting of decision trees) builds an ensemble of decision trees to improve on the accuracy of a single tree classifier or regressor. Each tree in the ensemble depends on the trees before it: as the algorithm proceeds, every new tree learns from the residuals of the preceding trees.

Mixed-effects models are statistical models that contain both random effects (model parameters that are random variables) and fixed effects (model parameters that are fixed quantities).

The GPBoost library is written in C++ and has a C API. Although it combines tree-boosting with GP and mixed-effects models, it also lets us use tree-boosting on its own, as well as GP and mixed-effects models on their own.

Tree-boosting and GPs are two techniques that achieve state-of-the-art prediction accuracy, and GPBoost lets us leverage the advantages of both.

GPs:

- Enable probabilistic predictions for quantifying uncertainty.
- Help find a model that describes dependencies among variables.

Tree-boosting:

- Handles missing values on its own when making predictions.
- Is invariant to monotone transformations of the feature variables used for prediction (scale invariance).
- Automatically models discontinuities, non-linearities and complex interactions.
- Is robust to multicollinearity among the predictor variables and to outliers.

The label/response variable y in the GPBoost algorithm is assumed to be of the form

$$y = F(X) + Zb + \xi$$

where

- F: non-linear mean function (predictor function)
- X: covariates/features/predictor variables
- Zb: random effects, which can comprise a Gaussian process, grouped random effects, or a sum of both
- ξ: independent error term

Training the GPBoost algorithm means learning the hyperparameters of the random effects (called covariance parameters) and learning F(X) as an ensemble of decision trees. In simple terms, GPBoost is a boosting algorithm that iteratively learns the covariance parameters using natural gradient descent or Nesterov accelerated gradient descent, and adds a decision tree to the ensemble using Newton and/or gradient boosting. The trees are learned using the LightGBM library.

This article demonstrates combining tree-boosting with GP models using the GPBoost Python library. The code was implemented in Google Colab with Python 3.7.10, shap 0.39.0 and gpboost 0.5.1, and is explained step by step in its comments.
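The residual-fitting idea behind tree-boosting described above can be sketched in a few lines of pure Python. This is plain gradient boosting for squared-error loss with depth-1 trees (stumps) on a single feature; it is not GPBoost's or LightGBM's implementation, and the names `fit_stump`, `predict_stump` and `boost` are hypothetical.

```python
def fit_stump(x, r):
    """Find the split on a 1D feature that minimizes the squared error of residuals r."""
    pairs = sorted(zip(x, r))
    n = len(pairs)
    total = sum(v for _, v in pairs)
    best = None
    left_sum = 0.0
    for i in range(1, n):
        left_sum += pairs[i - 1][1]
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical feature values
        left_mean = left_sum / i
        right_mean = (total - left_sum) / (n - i)
        # Minimizing the SSE is equivalent to maximizing this quantity
        gain = i * left_mean ** 2 + (n - i) * right_mean ** 2
        if best is None or gain > best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (gain, threshold, left_mean, right_mean)
    if best is None:  # all feature values identical: constant stump
        mean = total / n
        return (float("inf"), mean, mean)
    _, threshold, left_mean, right_mean = best
    return (threshold, left_mean, right_mean)


def predict_stump(stump, xi):
    threshold, left_value, right_value = stump
    return left_value if xi <= threshold else right_value


def boost(x, y, n_trees=100, learning_rate=0.1):
    """Each new stump is fit to the residuals y - F(x) of the current ensemble."""
    f0 = sum(y) / len(y)  # start from the mean of the labels
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        # Add a shrunken copy of the new tree's predictions to the ensemble
        pred = [pi + learning_rate * predict_stump(stump, xi) for pi, xi in zip(pred, x)]
    return f0, stumps, pred


x = [i / 50 for i in range(50)]
y = [1.0 if xi > 0.5 else 0.0 for xi in x]  # a step function
f0, stumps, pred = boost(x, y)
train_mse = sum((yi - pi) ** 2 for yi, pi in zip(y, pred)) / len(y)
```

For a step function like this one, every stump finds the same split and removes a fixed fraction (the learning rate) of the remaining residual, so the training error shrinks geometrically as trees are added.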
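To make the model form y = F(X) + Zb + ξ concrete, the following sketch simulates data with grouped random effects, where Z maps each observation to the effect b_j of its group and ξ is an independent error term, and then predicts b from the residuals y - F(X). It assumes F and both variances are known; GPBoost instead learns F with trees and the variances as covariance parameters. The choice F(x) = sin(3x), all parameter values, and the helper names `simulate` and `predict_random_effects` are hypothetical.

```python
import math
import random


def F(x):
    # Hypothetical non-linear mean function, chosen only for illustration
    return math.sin(3.0 * x)


def simulate(n_groups=50, per_group=20, sigma2_b=1.0, sigma2=0.5, seed=0):
    """Simulate y = F(x) + Zb + xi with grouped random effects."""
    rng = random.Random(seed)
    # One random effect per group: b_j ~ N(0, sigma2_b)
    b = [rng.gauss(0.0, math.sqrt(sigma2_b)) for _ in range(n_groups)]
    x, group, y = [], [], []
    for j in range(n_groups):
        for _ in range(per_group):
            xv = rng.random()
            x.append(xv)
            group.append(j)
            # Row i of Z is a group indicator, so (Zb)_i = b_j;
            # xi_i ~ N(0, sigma2) is the independent error term
            y.append(F(xv) + b[j] + rng.gauss(0.0, math.sqrt(sigma2)))
    return x, group, y, b


def predict_random_effects(x, group, y, n_groups, sigma2_b, sigma2):
    """Posterior mean of each b_j given known F and variances (a shrunken group mean)."""
    sums = [0.0] * n_groups
    counts = [0] * n_groups
    for xv, j, yv in zip(x, group, y):
        sums[j] += yv - F(xv)
        counts[j] += 1
    b_hat = []
    for j in range(n_groups):
        n_j = counts[j]
        if n_j == 0:
            b_hat.append(0.0)  # no data for this group: use the prior mean
            continue
        shrink = n_j * sigma2_b / (n_j * sigma2_b + sigma2)
        b_hat.append(shrink * sums[j] / n_j)
    return b_hat


x, group, y, b = simulate()
b_hat = predict_random_effects(x, group, y, 50, 1.0, 0.5)
mse = sum((bh - bt) ** 2 for bh, bt in zip(b_hat, b)) / len(b)
```

The shrinkage factor pulls the mean residual of small or noisy groups toward zero, which is how random effects differ from estimating a separate fixed parameter per group.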
